Pixels

Pixels, like many things in the computer industry, are not self-evident. The human eye seems naturally to analyse things into lines and spaces. The eye is arguably built from several million irregularly sized and shaped pixels, but nerve circuits within the eye, and then a mass of circuitry in the visual cortex and brain, analyse what is seen into lines and then concepts.

The word pixel, meaning "picture element", came into use in the computer industry in the 1960s, although it had apparently been used sporadically before.

The concept isn't entirely new. Artists such as Georges Seurat developed Pointillism in the 1880s. The idea of breaking an image up into swaths, or a raster, had been explored by Alexander Bain as early as 1843, and a reliable way to do it was proposed in the 1880s with the Nipkow disk.

Mechanical Imaging

One of the first examples of using machinery to break an image apart is Alexander Bain's telegraph of 1843, which might be considered the first facsimile machine. Mechanical scanning works best as a continuous process. The Nipkow disk (1884) is a rotating disk with a spiral of holes which can be used to scan an image into or out of a machine. Refinements of this principle were often used in the early mechanical televisions.
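The geometry of a Nipkow disk is simple enough to sketch. The Python below is a minimal illustration, not a description of any particular machine: it assumes a single-turn spiral of equally spaced holes, each one line pitch nearer the centre than the last, so that during one revolution each hole sweeps one scan line across the picture. The function name `nipkow_holes` and the 30-hole example dimensions are hypothetical.

```python
import math
from typing import List, Tuple


def nipkow_holes(num_holes: int, outer_radius: float,
                 line_pitch: float) -> List[Tuple[float, float]]:
    """Return (x, y) centres of the holes in a single-turn Nipkow spiral.

    Hole k sits at angle 2*pi*k/num_holes and k line pitches nearer the
    centre than hole 0, so as the disk turns each hole traces one scan
    line, each slightly inside the previous one.
    """
    if line_pitch * (num_holes - 1) >= outer_radius:
        raise ValueError("spiral would reach the centre of the disk")
    holes = []
    for k in range(num_holes):
        angle = 2 * math.pi * k / num_holes
        radius = outer_radius - k * line_pitch
        holes.append((radius * math.cos(angle), radius * math.sin(angle)))
    return holes


# Example: a hypothetical 30-hole disk, 200 mm outer radius, 2 mm line pitch.
holes = nipkow_holes(30, 200.0, 2.0)
radii = [math.hypot(x, y) for x, y in holes]
print(f"{len(holes)} holes, radius {radii[0]:.1f} mm down to {radii[-1]:.1f} mm")
```

Because one revolution of a single-spiral disk scans every line once, the disk's rotation speed sets the frame rate directly.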

Early mechanical TV cameras were hampered by light sensors that were nowhere near sensitive enough. The flying spot camera shone a bright pinpoint of light onto the scene and scanned it back and forth, using a fast-rotating Nipkow disk for the scanning and a mirror for the frames. A group of selenium photocells responded when there was reflected light, but when the spot hit dark material the light was absorbed and the level on the photocells fell. This worked to some extent if the studio was kept largely dark, but actors had problems working in the dark.

Scanning a scene by using a spot of light and a set of unfocused detectors is unusual but not completely unknown today.

Electro-optic visual processing using lasers and mirrors is not unusual; this is how laser cutters and laser displays work. So far, however, the fairly powerful lasers needed for this kind of thing have remained quite expensive. They are also somewhat dangerous: an incautious action can easily result in damaged sight.

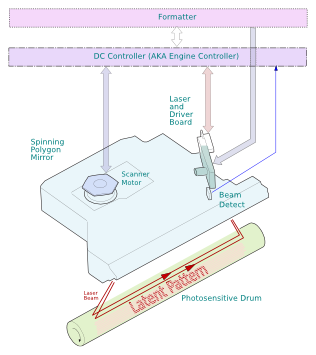

Sketch of a laser scanner mechanism.

Mechanical video processing is still commonplace: it is used in laser printers, where a polygon mirror scans the image onto the page, and in some colour digital light processing (DLP) projectors. Lidar captures a 3D image with depth measurements, using mirrors to aim the beam. Document scanners generally push a linear CCD sensor down the page: the horizontal dimension is read electronically, and the vertical dimension is achieved by stepping the motor onwards and reading again. Mechanical parts, however, tend to be fragile and wear out.
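The division of labour in a document scanner can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: `read_line` and `advance_carriage` are hypothetical stand-ins for the sensor electronics and the stepper motor, not any real scanner API, and the "page" is simulated.

```python
from typing import Callable, List

Row = List[int]


def scan_page(read_line: Callable[[], Row],
              advance_carriage: Callable[[], None],
              num_rows: int) -> List[Row]:
    """Build a page image one row at a time from a linear sensor.

    read_line() returns a whole row of pixel values at once: the horizontal
    dimension is read electronically. advance_carriage() moves the sensor
    one step down the page: the vertical dimension is mechanical.
    """
    image: List[Row] = []
    for row in range(num_rows):
        image.append(read_line())
        if row < num_rows - 1:  # no step needed after the last row
            advance_carriage()
    return image


# Simulated hardware: a tiny 4 x 3 "page" and a carriage position counter.
page = [[0, 255, 0, 255], [255, 0, 255, 0], [0, 0, 255, 255]]
position = 0


def fake_read_line() -> Row:
    return list(page[position])


def fake_advance() -> None:
    global position
    position += 1


image = scan_page(fake_read_line, fake_advance, len(page))
print(image == page, "motor steps:", position)
```

Each electronic read returns a full row in one call, while every further row costs a motor step, which is why the vertical axis is the slow, wearing part of the process.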

Electronic Imaging

Electronic methods can be preferable to mechanical ones because there is nothing to wear out. After 1939, experiments with TV ceased for a few years as wartime requirements took over. When experiments and factories restarted, mechanical methods were dropped: wartime radar production had made CRTs (cathode ray tubes) the best option for camera tubes and display screens.

CRTs were a long-lasting technology. A "tube" is shaped roughly like a chemist's flask lying on its side. An electron gun in the neck points at a coating of phosphor, or a pattern of colour-emitting phosphor dots, on what would be the flask's base but is actually the screen. Where the electron beam strikes, the phosphor lights up. The beam can be steered by electromagnetic coils, and that is what normally happens in a TV.

Sketch of a CRT.
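In a TV the deflection coils steer the beam in a raster: a fast horizontal sweep for each line and a slow vertical sweep down the screen. The Python below sketches that pattern as normalised beam positions. It is an idealised model under stated assumptions: the function name is hypothetical, flyback (the beam's return to the left or top) is ignored, and the optional interlaced order (alternate lines in a first field, the remaining lines in a second, as broadcast TV did) is included for illustration.

```python
from typing import Iterator, Tuple


def raster_positions(lines: int, samples_per_line: int,
                     interlaced: bool = False) -> Iterator[Tuple[float, float]]:
    """Yield normalised (x, y) beam positions for one frame.

    x ramps from -1 (left) to +1 (right) along every line: the fast
    horizontal sawtooth. y goes from -1 (top) to +1 (bottom): the slow
    vertical deflection. With interlaced=True, lines 0, 2, 4, ... are drawn
    as a first field and lines 1, 3, 5, ... as a second field.
    """
    if lines < 2 or samples_per_line < 2:
        raise ValueError("need at least 2 lines and 2 samples per line")
    if interlaced:
        order = list(range(0, lines, 2)) + list(range(1, lines, 2))
    else:
        order = list(range(lines))
    for line in order:
        y = -1 + 2 * line / (lines - 1)
        for s in range(samples_per_line):
            x = -1 + 2 * s / (samples_per_line - 1)
            yield (x, y)


# Heights at which each line starts, for a 5-line interlaced frame.
starts = [y for x, y in raster_positions(5, 3, interlaced=True) if x == -1]
print(starts)
```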

Radar was an expensive military technology, and until recently remained so. Used as the basis for televisions, however, CRTs proved surprisingly affordable. Many households in the US and Europe got monochrome TVs in the 1950s; in Britain a great incentive was the Queen's coronation in 1953. Colour TV sets were more widely available in the US than elsewhere, but at first the triplicated circuitry needed for colour carried a high cost in both purchase price and reliability. America's NTSC colour broadcast standard was an advantage at first, but the standard then stuck and the US was unable to escape from it. In a reference to its poor colour rendering, NTSC was jokingly rechristened "never the same colour twice". Other countries adopted different standards, partly because they felt them to be better but also in an attempt to protect their home markets from foreign competition. By the 1970s, however, Asian producers were making sets for all the national standards simply by changing a few components, so the protectionist aspect of these plans largely failed.

From the 1970s onwards CRTs were adopted as computer monitors. Production economies of scale meant that early designs were often based on TV tubes, and only in the 1990s, when the market was proving to be huge, were purpose-designed tubes widely available. CRT monitors are easily recognised: a bowed front glass and a box nearly as deep as the screen is wide are give-aways.

Sketch of an LCD.

LCD screens have largely killed the CRT market because they can have a large screen area whilst the box is relatively flat. They can be hung on the wall like a picture, although some people find that an uncomfortable viewing angle.

LCDs work by using liquid-crystal pixel elements to twist polarised light. The principle actually dates back to the 19th century but was neglected until the late 1960s, when its low power requirements started to attract attention. The first LCDs appeared as segment displays for calculators and voltmeters in the 1970s, then as screens on portable computers. Early screens competed with little CRTs, plasma displays and even LED line displays.

By around 2003 the benefits of LCDs were becoming clear. The screen is flat, so it occupies much less desk and floor space. The display also tends to be relatively steady. Indeed, LCDs were slow to change brightness, which meant that fast action in a game could blur, but the same steadiness was a benefit for text. The inflexion point at which LCDs began to dominate CRTs was around 2004; in 2014 you simply don't see CRT monitors or TVs being offered in the shops.

Electronic Progress

For about 20 years, CRT monitors on desktop computers coexisted with smaller and more expensive LCDs in laptop and notebook machines. There were also challenger technologies:

- Plasma seems to be dying: colour versions use too much power, run hot and are neither reliable nor repairable. CRTs had these defects too, but their circuitry could often be repaired.

- OLED monitors suffer from image burn-in, something which also afflicted CRTs. They are also difficult to make reliably on a large scale.

- There is a host of other technologies on which big companies have bet billions, only to find that LCDs, with lower prices and better performance, took the market.

Some mechanical systems continue to overlap with video; printers, for instance, are almost inherently part mechanical.

Both Lexmark and HP have marketed multifunction printers (MFPs) with two-dimensional, high-resolution camera chips rather than scanners. Camera images are produced by "solid state" chips and displayed on LCD screens. Inkjet printers are beginning to move to a single static page-width head, as in the Lomond and OfficeJet XL machines. OKI printers invariably replace the laser scanning head with an LED array.

A limit on handling video as pixels has always been its memory and communication requirements. This is particularly true for television, where older equipment had to repeat the whole picture 25 times per second in Europe and 30 in the US, sent as 50 or 60 interlaced fields to fit in with the mains power frequency. Broadcast TV was limited to several hundred lines because otherwise the bandwidth required to transmit a signal would monopolise the airwaves.
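The link between line count and bandwidth can be put in rough numbers. The sketch below assumes the usual broadcast figures of 625 lines at 25 pictures per second in Europe and 525 lines at a nominal 30 in the US (figures not given above), a 4:3 picture and square pixels. It deliberately ignores blanking intervals and other real-world factors, so it overestimates; it is a back-of-envelope illustration, not a broadcast specification.

```python
def line_rate_hz(lines_per_frame: int, frames_per_second: float) -> float:
    """Number of scan lines the beam must draw each second."""
    return lines_per_frame * frames_per_second


def rough_bandwidth_hz(lines_per_frame: int, frames_per_second: float,
                       aspect: float = 4 / 3) -> float:
    """Crude video bandwidth estimate, assuming square pixels.

    Horizontal detail is taken to match vertical detail, so each line
    has about aspect * lines pixels. One cycle of the signal can carry
    one light and one dark pixel, hence the division by two.
    """
    pixels_per_line = aspect * lines_per_frame
    pixel_rate = pixels_per_line * lines_per_frame * frames_per_second
    return pixel_rate / 2


for name, lines, fps in [("Europe", 625, 25), ("US", 525, 30)]:
    print(f"{name}: {line_rate_hz(lines, fps):,.0f} lines/s, "
          f"about {rough_bandwidth_hz(lines, fps) / 1e6:.1f} MHz")
```

Because detail rises in both directions, doubling the line count roughly quadruples this estimate, which shows why broadcasters held line counts to a few hundred.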

TV had to continually repeat a scene because there was no satisfactory way to store and then update it. Even if the scene was static, like a test card, it was continually repeated.

The first computer display screens used electro-mechanical delay lines to store the character images. These were replaced in the 1970s by character generators and by clever devices such as the Tektronix 4014, which used the CRT itself as a memory. Colour displays at TV-like resolutions of around 640 x 480 and 800 x 600 were available in arcade games in the late 1970s, but only became affordable in home computers with the IBM VGA adaptor in 1987. Even VGA needed a raft of compromises to fit a large screen into a small memory, such as the use of palettes and reduced resolutions.
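The palette compromise can be sketched in Python. The figures are assumptions taken from the standard VGA design rather than from the text above: 256 KB of video memory and a palette of up to 256 entries, each holding 6 bits per red, green and blue channel. The frame buffer stores only a small index per pixel, and the palette turns each index into a full colour at display time. All function names are hypothetical.

```python
from typing import List, Tuple

VGA_MEMORY_BYTES = 256 * 1024  # assumed standard VGA video memory

RGB = Tuple[int, int, int]  # 6-bit channels, 0..63, as in the VGA palette


def framebuffer_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Memory needed to hold one screen of palette indices."""
    return (width * height * bits_per_pixel + 7) // 8


def fits_in_vga(width: int, height: int, bits_per_pixel: int) -> bool:
    return framebuffer_bytes(width, height, bits_per_pixel) <= VGA_MEMORY_BYTES


def expand(indices: bytes, palette: List[RGB]) -> List[RGB]:
    """Look up each stored index in the palette to get its displayed colour."""
    return [palette[i] for i in indices]


for w, h, bpp in [(640, 480, 4), (320, 200, 8), (640, 480, 8)]:
    print(f"{w}x{h} at {bpp} bits: {framebuffer_bytes(w, h, bpp):,} bytes, "
          f"fits: {fits_in_vga(w, h, bpp)}")

# A hypothetical 4-entry grey palette: pixels store an index, not a colour.
greys = [(v, v, v) for v in (0, 21, 42, 63)]
print(expand(bytes([0, 3, 1, 2]), greys))
```

Under these assumptions 640 x 480 fits only with a 4-bit index per pixel (16 colours at once), while 256 colours fit only at the reduced 320 x 200 resolution.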

Apple's LaserWriter printer, launched in 1985, needed over a megabyte of memory to hold the 300 dpi page image ready for printing. This made the printer rather expensive at over $6000: twice the price of the HP LaserJet, but with 8 times the memory. Both printers used the same Canon chassis, but whereas the Apple product could print almost any image you could throw at it, the HP, with just 128 kilobytes of memory, was largely limited to text produced by character-generator routines.
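The arithmetic behind the LaserWriter's megabyte is easy to check. The sketch below assumes a US Letter page (8.5 x 11 inches) imaged edge to edge at one bit per pixel, with each row padded to a whole number of bytes; real printers have unprintable margins and need memory for other things too, so treat the results as approximate. The function names are hypothetical.

```python
def page_buffer_bytes(width_in: float, height_in: float, dpi: int,
                      bits_per_pixel: int = 1) -> int:
    """Bytes needed to hold a full page image, each row padded to whole bytes."""
    width_px = round(width_in * dpi)
    height_px = round(height_in * dpi)
    row_bytes = (width_px * bits_per_pixel + 7) // 8
    return row_bytes * height_px


def rows_that_fit(memory_bytes: int, width_in: float, dpi: int) -> int:
    """How many full-width 1-bit rows a given amount of memory could hold."""
    row_bytes = (round(width_in * dpi) + 7) // 8
    return memory_bytes // row_bytes


letter = page_buffer_bytes(8.5, 11, 300)
print(f"Full Letter page at 300 dpi: {letter:,} bytes")

# Even if all 128 KB were page buffer, only a strip of the page would fit.
strip = rows_that_fit(128 * 1024, 8.5, 300)
print(f"128 KB holds {strip} rows, about {strip / 300:.2f} inches of the page")
```

A full page comes to just over a million bytes, while 128 KB holds under an inch and a half of it, which helps explain why the LaserJet leaned on character generators rather than a full-page bitmap.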

Memory and network bandwidth restrictions on what can be done with bitmaps are beginning to fade.

An A4 colour laser-printer page at 300 dpi will need at least 4 megabytes of memory: a megabyte for each of the four colours, cyan, magenta, yellow and black. It might need sixteen times that (64 megabytes) if it can print at 1200 dpi, and that might double again if each pixel can also take two intermediate grey levels (two bits per pixel).
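The estimate above can be checked with a small calculation. This sketch assumes the full 210 x 297 mm A4 sheet is imaged with no margins, four separate colour planes (cyan, magenta, yellow, black) and rows padded to whole bytes; it uses integer arithmetic for the millimetre-to-pixel conversion so the results are exact. The function names are hypothetical.

```python
MM_PER_INCH_X10 = 254  # 25.4 mm per inch, scaled by 10 to keep integers


def mm_to_px(mm: int, dpi: int) -> int:
    """Convert a length in millimetres to pixels, rounding to nearest."""
    return (mm * dpi * 10 + MM_PER_INCH_X10 // 2) // MM_PER_INCH_X10


def colour_page_bytes(dpi: int, bits_per_pixel: int = 1, planes: int = 4,
                      width_mm: int = 210, height_mm: int = 297) -> int:
    """Bytes for a full-page image with one bitmap per colour plane."""
    width_px = mm_to_px(width_mm, dpi)
    height_px = mm_to_px(height_mm, dpi)
    row_bytes = (width_px * bits_per_pixel + 7) // 8
    return row_bytes * height_px * planes


MIB = 1024 * 1024
for dpi, bpp in [(300, 1), (1200, 1), (1200, 2)]:
    size = colour_page_bytes(dpi, bpp)
    print(f"A4 CMYK at {dpi} dpi, {bpp} bit(s)/pixel: {size / MIB:.1f} MiB")
```

At 300 dpi this comes to about 4.1 MiB, in line with "at least 4 megabytes"; at 1200 dpi it is about 66 MiB, and about 133 MiB with two bits per pixel.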

However, since 1 gigabyte of bog-standard PC memory now costs about £10, the cost of memory has recently ceased to be much of a problem. One remaining problem is that printer makers tend to use slightly weird chipsets that won't work with standard PC memory, but that is another story.

Copyright G & J Huskinson & MindMachine Associates Ltd 2013
